Last update November 29, 2024

In this article, I’ll describe my first attempt at deploying a whole solution to Microsoft Azure. For this test, I used an application that was not meant for a cloud deployment and refactored it as necessary to make it work.

I’ve explained the application structure I would like to put in place in a first introductory article about this Cloud project. In summary, the solution I want to implement consists of a front-end SPA, an API manager, a back-end application, a database and a message queue.

My goal is to try to deploy the same application on different Cloud providers (Azure, AWS, GCP and OCI) and compare the difficulty, costs, time spent… This article is my first shot with Azure. I started with Microsoft Azure because that’s the Cloud provider I know best.

The effort and the time

I have a good theoretical overview of the Microsoft Azure services, but it was my first time actually creating apps and services, configuring and deploying them.

In this post, I’m documenting how it felt, the time spent, effort, documentation troubles… per service. Just keep in mind that it was my first attempt (including looking for solutions, trying stuff, failing…) and that a lot of things would be automated in a professional environment, while I did it manually.

On the other hand, there would be a lot more to do for a production-grade app (I didn’t dig that much into security for example, since I would just scrap my deployment after this little experiment).

For this attempt, I spent about 14 hours deploying the whole solution, including reading documentation, searching for solutions, debugging, starting the wrong way and having to start again…

Deploying the backend API

Azure App Service is ready to use with Java, among other SDKs like node.js, C#, Ruby… I made the mistake of starting with the backend instead of the database, which made me waste a little time.

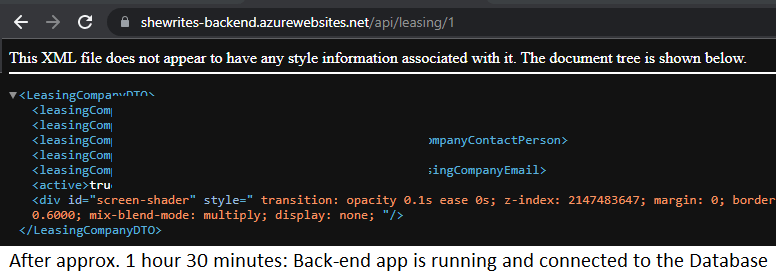

Even so, the deployment (creation of the service, clone of the code, build, failure to deploy because of the wrong database connection string, changes in the code to set the new database connection string, deployment, and including the time waiting for builds and deployments…) of the backend took less than one hour.

- Service used: Microsoft Azure App Service

- Total time: 45 minutes

- Quality of documentation: pretty decent as the service is really easy to use, but the fact that I’ve been developing in Java for years did help a lot

- Difficulty of the operation: Easy-peasy

Deploying the database server

The Azure documentation for PostgreSQL is pretty much limited to “how to set up a PostgreSQL server on Azure”. It doesn’t cover follow-up operations such as creating a database, and it doesn’t guide you through the next steps.

One could argue that, as they’re not the owner of the PostgreSQL technology, it’s not their responsibility to document it. But when you deploy a Java app on App Service, I feel like they cover more of the Java how-to, for example.

Creating the database server (including parameterizing and deployment) takes 10 minutes.

- Service used: Microsoft Azure Database for PostgreSQL

- Total time: 1 hour, including 45 minutes looking for the exact commands to use to create my database and apply a backup. When you know how to do this, it probably takes 15 to 20 minutes.

- Quality of documentation: Okay

- Difficulty of the operation: Easy if you know how to do it, headachy when you have to figure it out

How to create your database on the Azure Database for PostgreSQL server

From your local computer, you can connect from the command line to the default database on the server (it’s called “postgres”). You’ll find the connection information for your database server on the Azure portal. You have to make sure you have allowed public connections to the server and installed the SSL certificate on your machine.

psql "host=YOURSERVERNAME.postgres.database.azure.com port=5432 dbname=postgres user=YOURUSERNAME password=YOURUSERPASSWORD sslmode=require"

From there, you can create your database(s) as you would usually do. You may also connect to the server with a GUI like pgAdmin.

To import data (restore a dump) from your local database to the database on Azure, you have to make a pg_dump of the local database, then:

psql.exe -U YOURUSERNAME -h YOURSERVERNAME.postgres.database.azure.com -p 5432 -d YOURTARGETDATABASEONTHESERVER -f YOUR\LOCAL\PATH\TO\DUMP\FILE\YOURLOCALDUMPFILE.sql

Some references:

- How to backup and restore PostgreSQL databases in Windows 7? (StackOverflow)

- Restore a Postgre backup file using the command line? (StackOverflow)

Deploying the frontend web application

I expected the deployment of the Angular frontend app to be as easy as the deployment of the backend app. For deploying an app with Java or node.js, I had simply assumed that Azure is a plain remote server on which you clone your code, build and run your app. But when I tried installing Angular, it just didn’t work as easily.

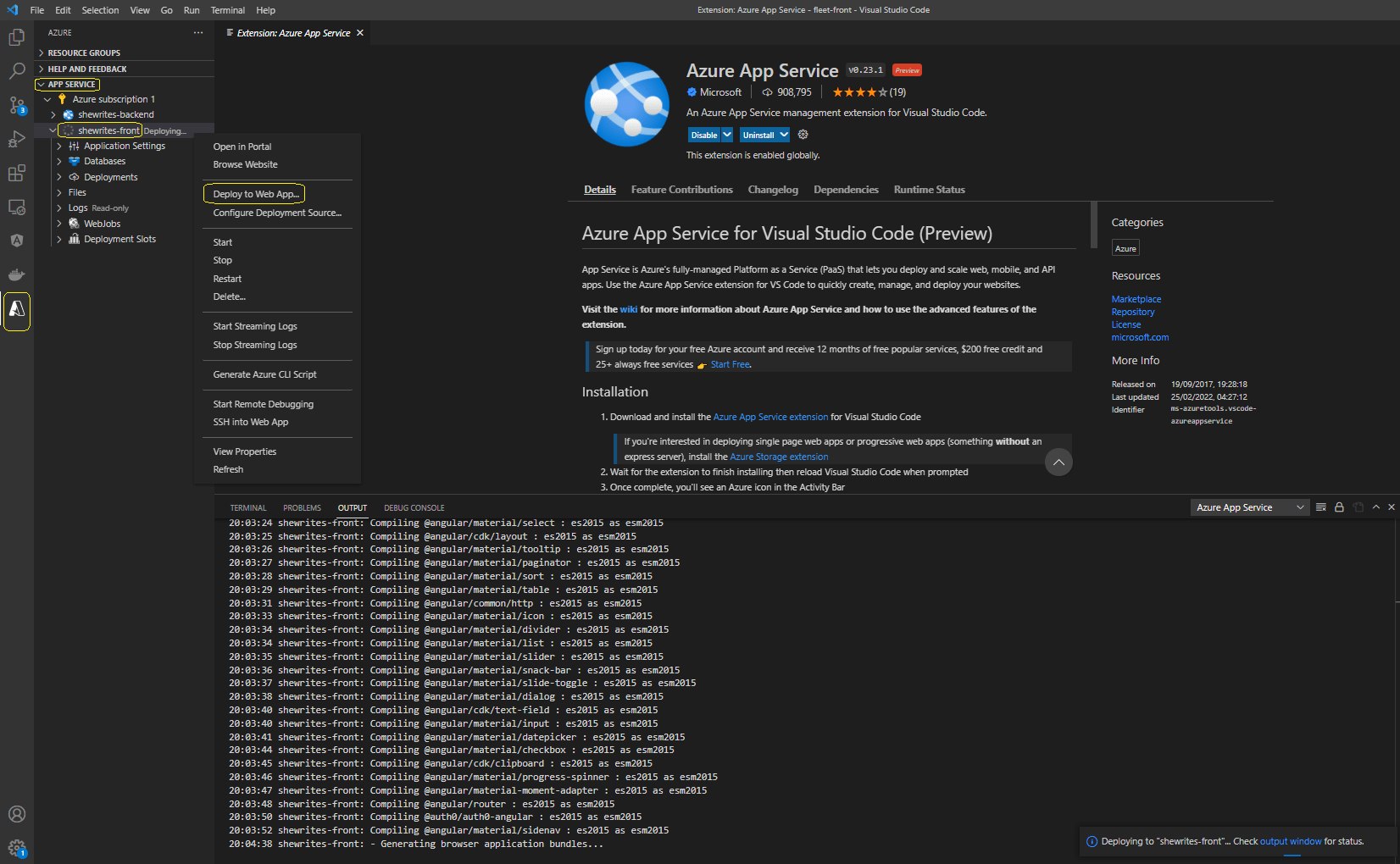

I couldn’t find a straightforward tutorial in the Azure documentation. After some research on blogs, I found two options: deploying with GitHub Actions, which was outside the scope I wanted to explore for this test, or deploying via Visual Studio Code and the Azure App Service plugin.

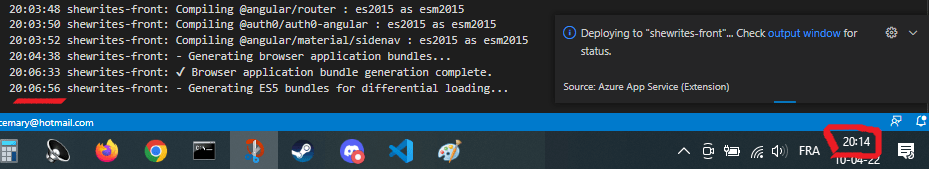

I chose the second option, which looked promising: the connection to Azure was smooth, and so was the launch of the deployment of my code to the previously created app service. Everything was great until it got stuck on a “Generating ES5 bundles…” message in the logs for 15 minutes.

The wheel was still turning, the status was still “deploying”, but I couldn’t see anything happen. I finally tried to stop the deployment and restart from the beginning, because I had no clue what happened.

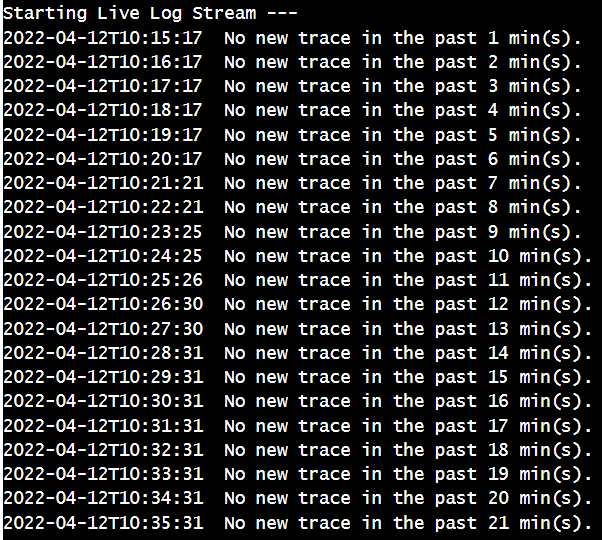

After 1 hour 15 minutes I had created my service but still wasn’t able to deploy my Angular app. And I was facing the issue I already had when I first integrated Azure services in an app: WHERE ARE THE LOGS?

Azure adds an extra layer of abstraction that’s supposed to ease your tasks, but when your case doesn’t work perfectly as described in the tutorial, you have to spend time finding logs where you can. That means, digging into the logging and monitoring system of Azure and gathering information from various places. I mean, they made a deployment plugin for Visual Studio Code, why wouldn’t they just show the error logs directly in VSC at failure?

As Ed Sheeran would sing it, My, my, my, my, oh give me looooogs, my, my, my, my…

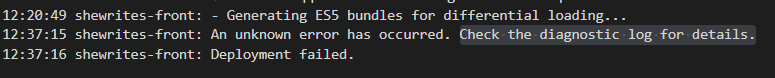

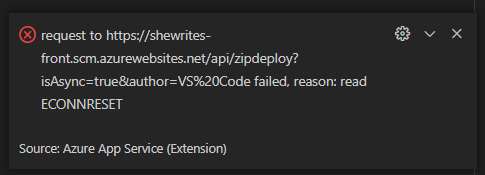

After a few attempts at reproducing the same steps with minor changes, I finally got an error message, something like “request to (app variables)VS%20Code failed, reason: read ECONNRESET“. I tried elaborate solutions (like this and this) to solve the issue, first with Visual Studio Code.

Note to self and to you: disable auto-update on Visual Studio Code, always. Downgrading to version 1.65.2 indeed took me one step further, just enough to encounter the next issue (so, in fact, one bug further…). Still without logs.

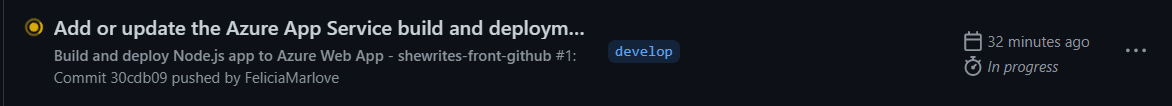

I was clueless, so I decided to try the automated deployment from GitHub Actions. It was out of my initial scope, but it was also the only way of deploying Angular on Azure that was more or less referenced by Microsoft. I faced a problem during the karma tests automatically run by GitHub. Again, I could see in the logs that something had failed, but GitHub still looked busy with the job.

After almost half an hour I cancelled the workflow, investigated the error (Chrome failed 2 times (cannot start). Giving up.), found information about karma tests failing during GitHub Actions build, pushed the changes (see below, in karma.conf.js), relaunched the workflow…

browsers: ['ChromeHeadless']

And I could finally see real application logs! And I discovered that, for some reason, GitHub Actions had created a deployment manifest to deploy with Docker, which was not my intent at all.

I could have changed the manifest, but as I was unhappy feeling forced to use the GitHub Actions method, I went back to my research to manage the deployment from Visual Studio Code.

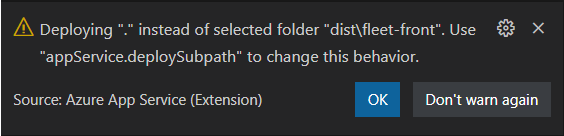

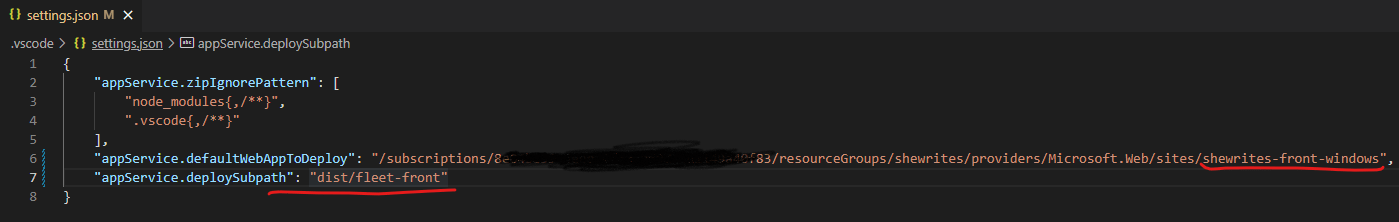

Reading this tutorial, I found out the automatically generated deployment file from the Azure Web App plugin for Visual Studio Code (both products of Microsoft…) doesn’t auto-generate a correct path for the “package” parameter in the settings.json file.

I got a new error, read that tutorial, and caught that sentence:

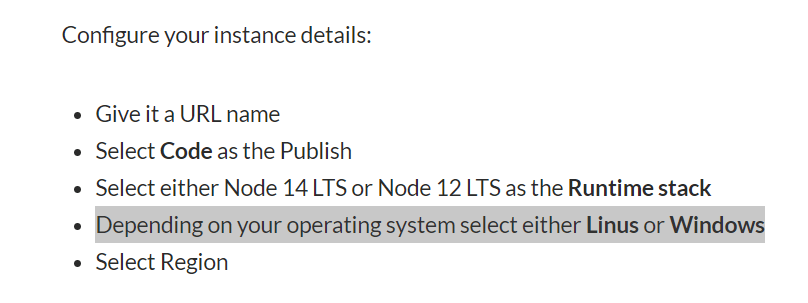

Having no other idea, I tried creating a Windows Web App service instead of a Linux one. Then, finally, in less than 5 minutes from creation to deployment, my frontend app was live!

So, great lesson learned: when you build an app with npm, it might create files that depend on your OS. The best way to avoid this is to run npm install on the deployment server, but in this case I was copy/pasting my local build (on a Windows machine) and needed the same operating system to run it.

I didn’t have that problem with the Java app, because 🔥Java runs everywhere🔥.

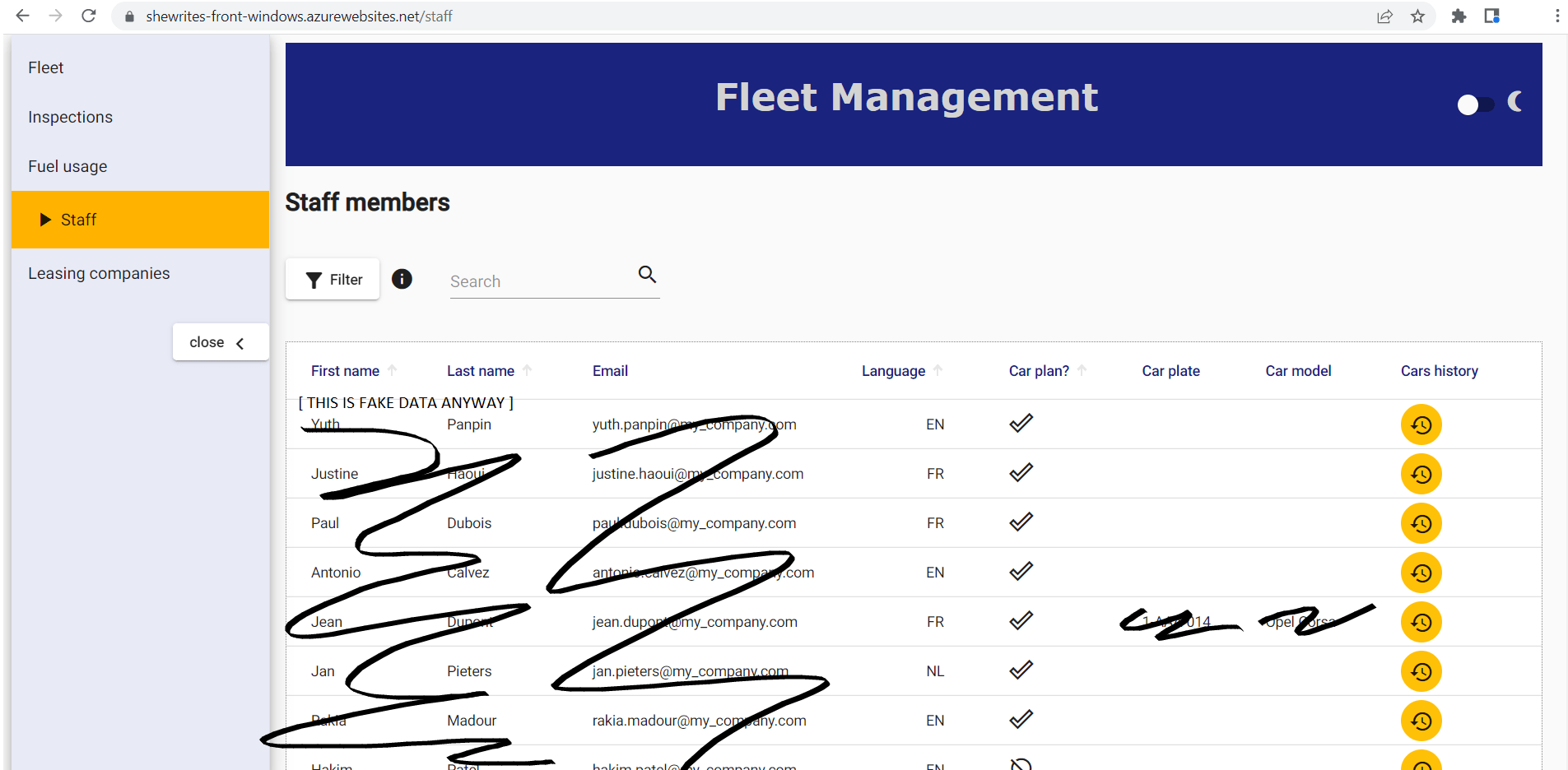

Just had to change the CORS policy in my backend app to the URL of my newly deployed frontend app, restart the service (it takes less than a second), and tadaaa! So much easier when you know what to do and how…

- Service used: Microsoft Azure App Service

- Total time: 3 to 4 hours including searching for the solution and trying fixes to deploy directly on the remote server or from Visual Studio Code + 1h30 trying the GitHub Actions solution and debugging + 30 minutes actually debugging things I understood with real clues + 5 minutes for actual deployment once everything was set up

- Quality of documentation: There is just none about Angular in the official Azure docs

- Difficulty of the operation: Might make you want to change jobs the first time you try it

Securing the communication between backend and frontend apps with APIM (API Management)

One of the tools I was most excited about is APIM, because it’s said to offer a solution to handle many security issues without changing your app code. Among others, it should allow you to easily set authentication and authorization to the backend endpoints. The security layer is still one of my weakest points (and honestly, I think there are things more interesting to develop).

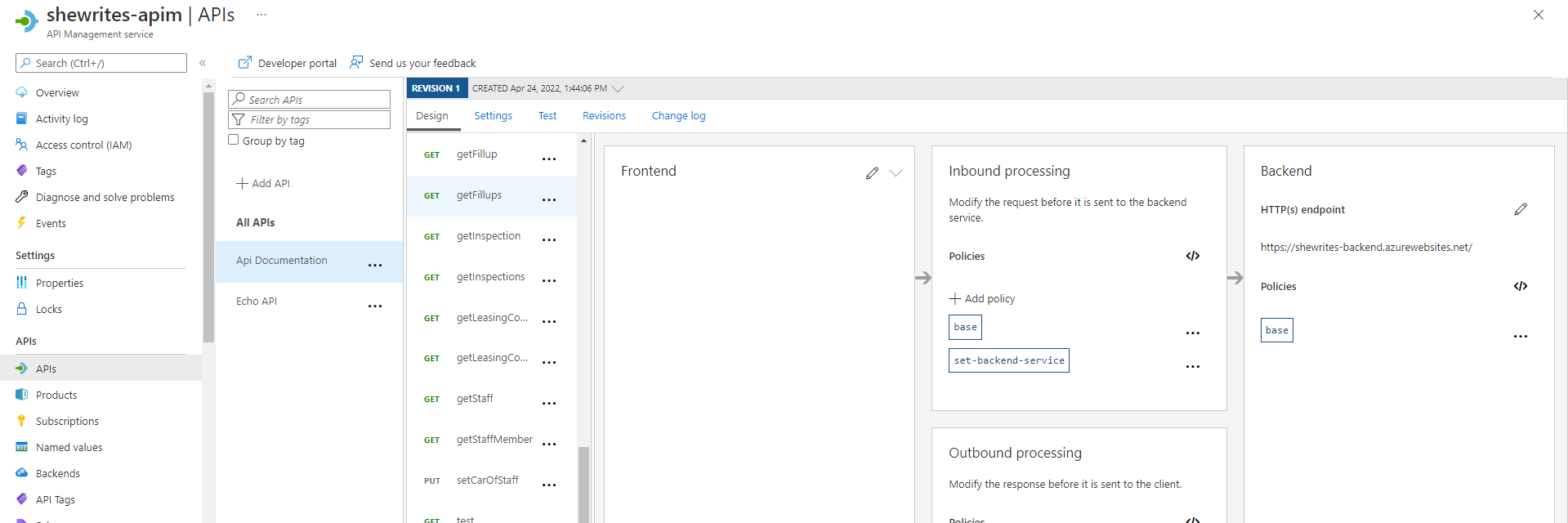

I spent about an hour trying to create an APIM service. First I went directly through my backend app, where it went super fast but I couldn’t make it work. Then I tried the APIM service menu, but the deployment was taking so long (15 minutes seemed pretty long to me) that I thought the service had crashed. Finally, third time’s the charm, I created it again via the APIM service menu and let it deploy for about 30 minutes (it was not bugged, it just actually takes that long).

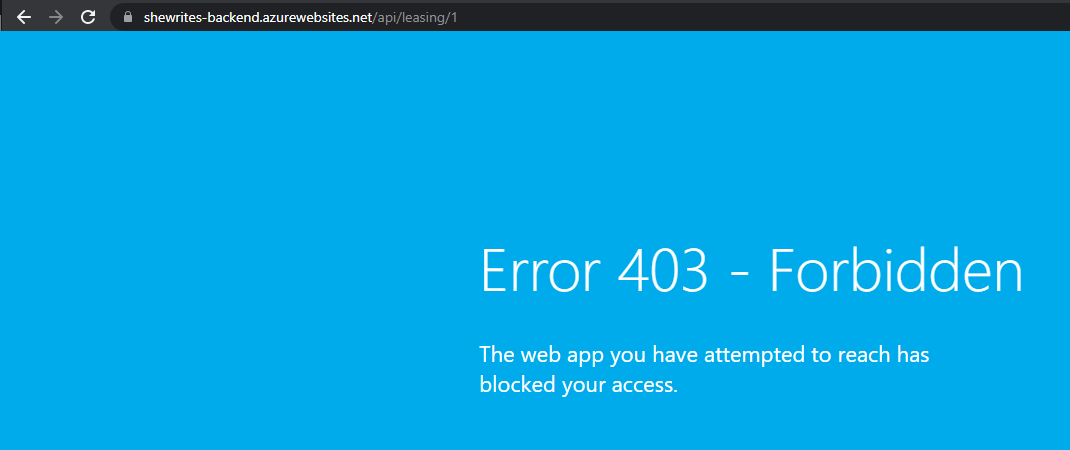

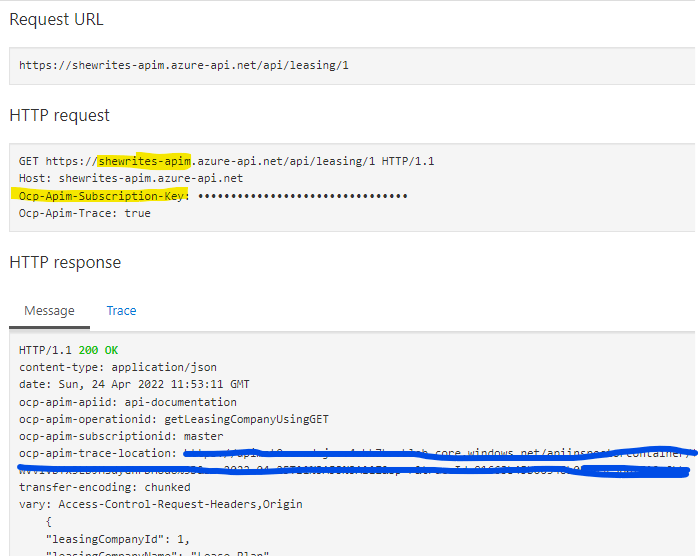

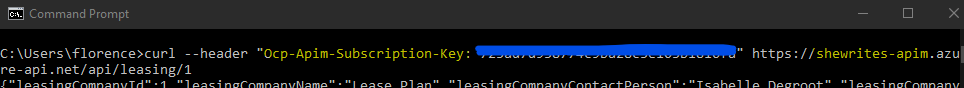

I managed to curl the API via the APIM URL. It then took me 5 minutes to deny all access to my backend app except through the APIM (the Azure documentation was pretty simple and it worked on the first try).

At that stage, the frontend app couldn’t communicate directly with the backend anymore, but that was exactly the point.

I wanted the frontend app to communicate with the backend through the APIM, so I changed the backend URL to use the one from the APIM. But the point was to add some security in here. The first step would be to use the “subscription key”, a key that’s necessary to make requests to the APIM.

Naïve me thought it would be easy to retrieve a secret from the Key Vault, on my Azure subscription, and use it in my frontend app, on my Azure subscription, in order to dynamically call my API with the necessary headers…

Naïve of me indeed, because it seems no such thing exists. Securing the backend app behind an APIM is pretty easy without even changing the code, but the frontend-to-API part is far from codeless. And again, it’s not documented as such: you have to dig in the docs in the hope of finding anything that puts you on the right path, read blog articles from 3 years ago when the Azure interface was completely different, and so on. In the end, I didn’t find a satisfying solution for the frontend part: I had to put the key in the request header in the code, which is a disgusting and insecure solution.
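To make that workaround concrete, here is a minimal sketch in plain JavaScript of what “the key in the header in the code” looks like. The gateway URL and key below are placeholders, the function names are made up for illustration, and my real frontend is an Angular app, so take it as an illustration rather than the actual code. `Ocp-Apim-Subscription-Key` is the default header name APIM looks for.

```javascript
// Placeholders: replace with your own APIM gateway URL and subscription key.
const APIM_BASE_URL = 'https://YOURAPIMNAME.azure-api.net';
const SUBSCRIPTION_KEY = 'YOURSUBSCRIPTIONKEY';

// Build the headers sent with every call that goes through the APIM.
function buildApimHeaders(subscriptionKey) {
  return {
    'Ocp-Apim-Subscription-Key': subscriptionKey,
    Accept: 'application/json',
  };
}

// Call a backend endpoint through the APIM. fetchImpl can be swapped for testing.
async function callBackend(path, fetchImpl = fetch) {
  const response = await fetchImpl(`${APIM_BASE_URL}${path}`, {
    headers: buildApimHeaders(SUBSCRIPTION_KEY),
  });
  if (!response.ok) {
    throw new Error(`APIM request failed with status ${response.status}`);
  }
  return response.json();
}
```

The weakness is easy to see: anything shipped to the browser is readable by the user, so the key is not a secret anymore.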

My goal at first was to use Azure AD, but as I was already stuck on the supposed-to-be-easy solution, and running out of time before the end of my trial period, I didn’t give it a try.

- Service used: Microsoft Azure API Management service

- Total time: about 2 hours to create and deploy the APIM and have it route requests to the backend app, about 2 hours to search and try solutions to establish the communication between frontend and API (but with a far-from-ideal solution)

- Quality of documentation: Decent

- Difficulty of the operation: Pretty easy for the backend/APIM, a failure for the front-to-API communication

Deploying a message queue service and functions

I decided to add a message queue service to the whole thing. It just hadn’t occurred to me that I would have to code an app to send the messages to the queue and wouldn’t be able to just send messages from my local Postman*… so I came up with a slightly changed structure for my app: a function receives the incoming HTTP calls and sends the content to a queue storage, which triggers another function that makes the HTTP call to my app.

As is, it doesn’t serve any concrete purpose; it was purely for practice. It would make sense if the data needed transformation or validation (use of a function), or for batch executions or a high number of concurrent requests (use of a queue), for example. Those things can also be done programmatically, but using Azure services lets you add those behaviors without modifying an existing code base.

Creating the queue storage was simple and fast, as it’s just a storage type inside your storage account. Creating the functions seemed pretty simple on paper (I mean, in the Azure documentation), but I faced a few surprises, like the fact that there is no actual “HTTP output binding” (it only exists to answer an HTTP triggered function).

I chose to create my functions in JavaScript, which is not my favorite language (That’s a euphemism. I hate JavaScript more than any other language I’ve ever tried. And I tried PHP at school.), because you can code it and test it directly on the portal (which is not the case if you choose Java, which has to be built and tested on your local machine).

I spent a fair amount of my time trying to write an HTTP request from inside the Azure function in JavaScript (I posted the requests that actually worked on my GitHub. Shoutout to all the people who posted answers about that problem on StackOverflow: I managed to do it by piecing all the answers together.).

In the end, including the JS struggles, it took me 2 hours to create the queue storage and the two functions and manage to make an end-to-end flow: my http-triggered function was callable from anywhere (with Postman on my local machine), it created a new message in the queue, which itself triggered another function which would make an http request to my backend app, via the APIM.

- Services used: Queue storage and Function app

- Total time: 10 minutes to create the queue storage (including the time to remember in which service it was) and a little less than 2 hours to create the 2 functions and manage to make them work end-to-end.

- Quality of documentation: Queue storage is the most basic queuing service of Azure, so documentation is not necessary for creation and parameterization. About functions, meh… it seems really clear on paper, but in practice I had to pick pieces of information from many documentation pages and search for more on StackOverflow.

- Difficulty of the operation: Pretty easy still.

* well, after all I found some documentation and it seems it’s possible to post messages with an HTTP request, but I was already done with my solution and the direct calls from Postman didn’t seem to work anyway…

Where I failed

I was really hoping to find in Azure an easy solution to implement security between services. I thought it would be easy to handle secrets and keys and manage the access of services to each other. It only seems to be the case with APIM (which is an expensive service). I couldn’t find a way to directly connect other services with Azure Key Vault, for example.

If I had to implement a solid security layer between my applications and services, I think I could easily multiply the time spent by 2. The problem is a mix of incomplete or inconsistent documentation, and my limited knowledge of security and infrastructure.

In conclusion, it’s interesting to note that deploying an app on Azure is not just a point-and-click matter: it still requires some skills and knowledge in development and infrastructure (it might seem silly to write that, but I often feel like cloud providers make it sound like anyone can do anything because it’s soooo easy with cloud services).

The cost

It’s important to take into account that:

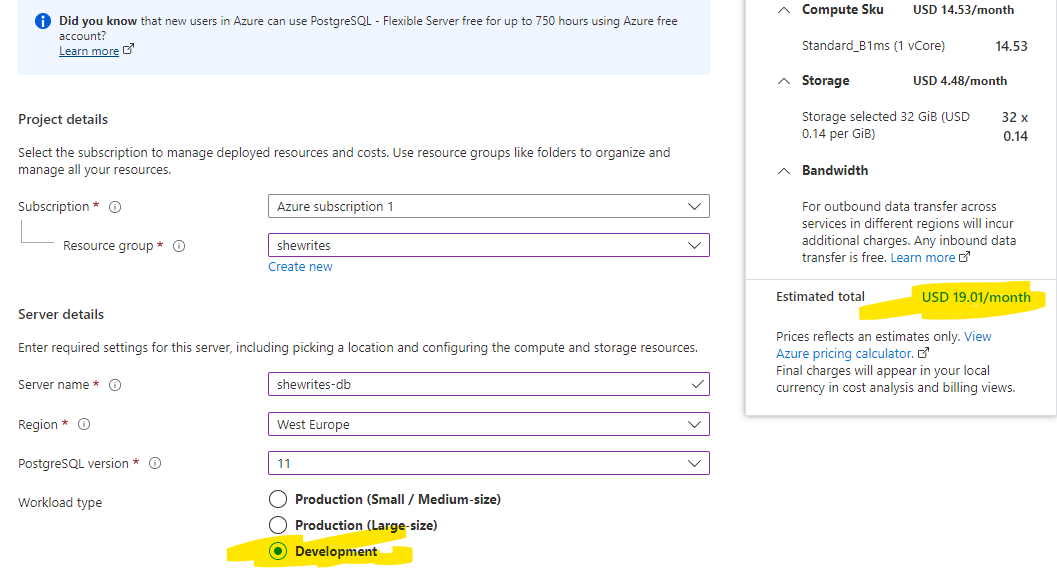

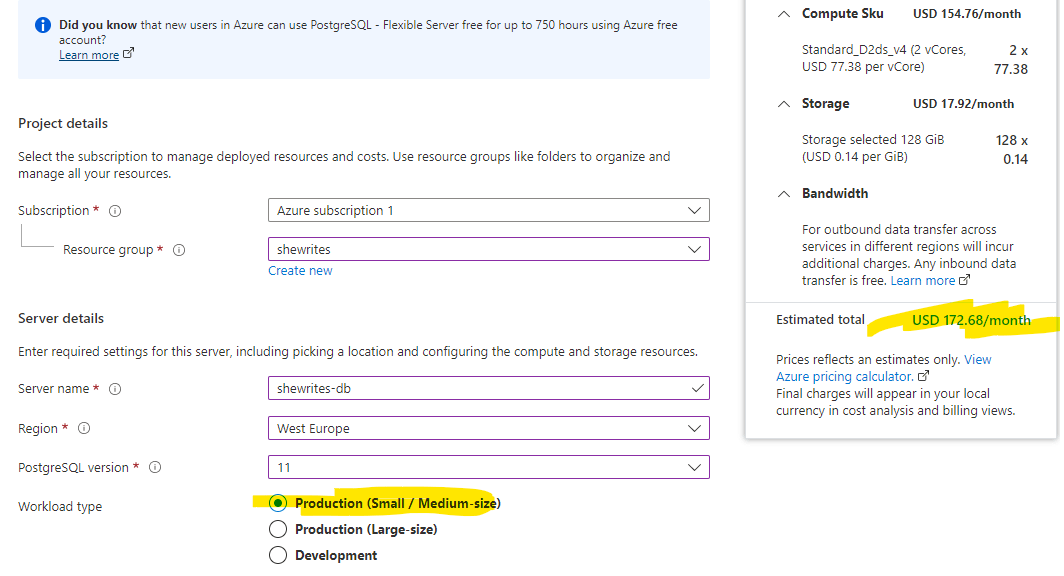

- I chose development* tier most of the time (because I had a limited budget allowed by Azure in their try-for-free offer and I had no idea how much I would need for my tests),

- my application wasn’t used by tens, hundreds or thousands of users (only me in fact),

- not all services were running 24/7, I chose services that start up on trigger when possible.

In production environments, higher costs are to be expected. The difference in price is pretty big.

* The development tier is a cheaper tier meant for development/test environments (sometimes even free), but it comes without SLAs and with limited options and other limitations.

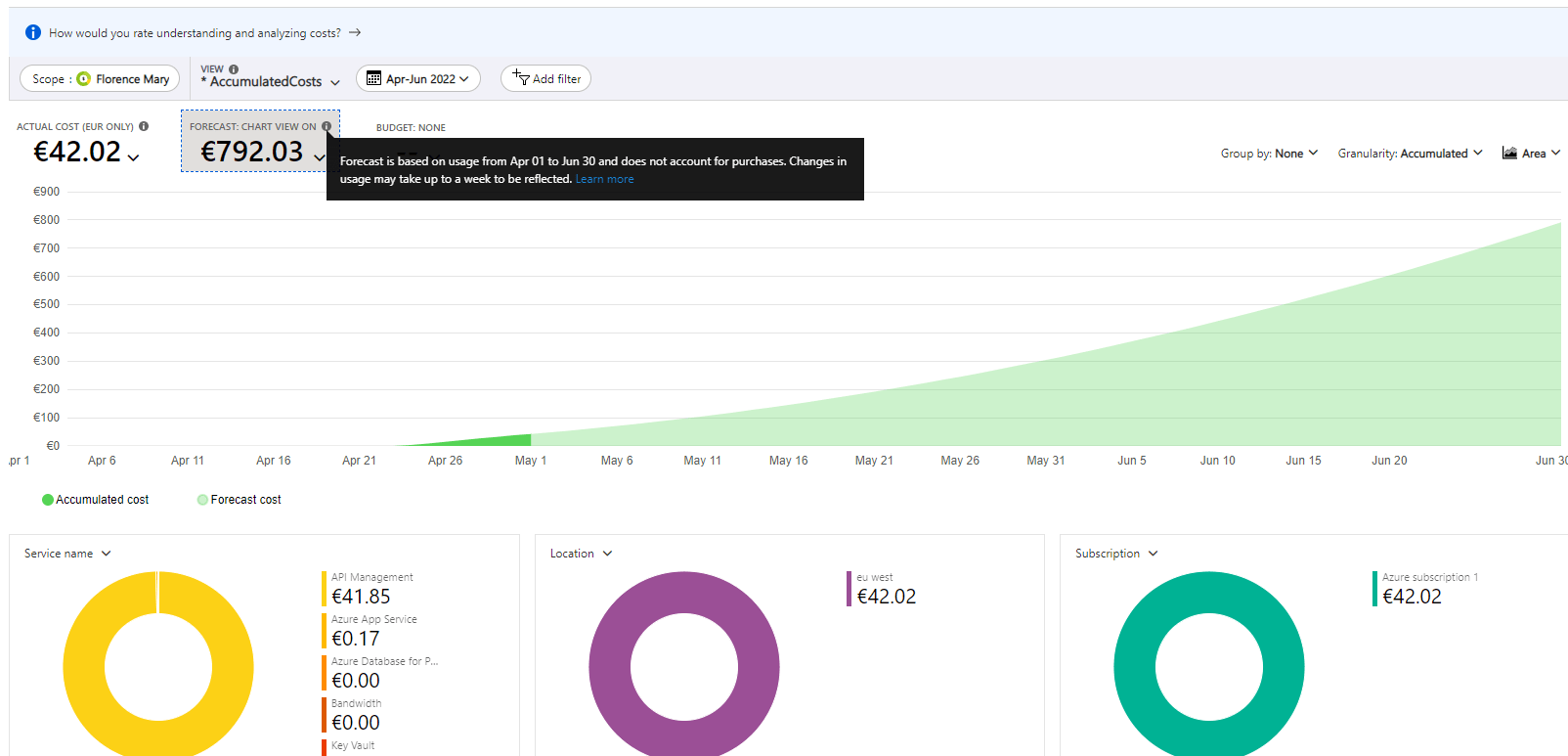

My current solution has a cost of approximately €42/month, but the forecast is about €792/month (forecast is based on a projection, I guess it takes into account the typical usage for this kind of solution). For my current trial usage, out of €42.02, €41.85 are linked to the usage of APIM! The remaining cents are from Azure App Service.

Azure ARM templates

The ARM templates of the implemented solution are available on my GitHub account for reference.